Trust & Transparency on AI - #CMUAIEthics

Trust is central to human relationships and is critical for both human-human and human-machine interactions. At the same time, technological advances can threaten trust by, for example, making it harder for people to understand these new technologies. In this session, Manuela Veloso, Moshe Y. Vardi, Kerstin Vignard, and David Danks discussed “Trust & Transparency on AI”: the ways in which trust shapes our relationships with one another and with machines, how it is affected by technology, and how we can preserve the trust we want.

Each panelist answered: In your view, what is the most important or challenging aspect of trust in AI and robotic technologies, including in their development and use?

Kerstin Vignard: The implications of the weaponization of these technologies are particularly important. Issues surrounding transparency and verification of algorithmic systems are among the most important when we consider increasing autonomy in weapons systems.

Moshe Y. Vardi: It’s important to recognize that we are right now in a trust crisis in AI.

Manuela Veloso: The key to building these programs, which will eventually change society, is to “justify”: to have our problem-solving algorithms generate justifications for why a given solution was applied, and to explain these in plain English, not in the language the system was built in, to whoever requests an explanation. This is just part of what will enable us to build more trust in the use and development of AI, robotics, and machine learning.

Watch a full recap of the session:

The final distinguished lecturer was Anca Dragan, who spoke about "Transparency & Trustworthiness on AI and Robotics".

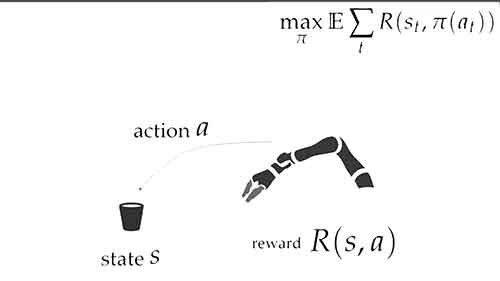

Robots take actions that change the state of the world. How they choose which actions to take is usually specified by a reward function (or objective function): the robot picks the actions that maximize cumulative reward.
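To make the idea of maximizing cumulative reward concrete, here is a minimal Python sketch. It is not from the talk: the one-dimensional world, the `reward` values, and the brute-force planner `best_plan` are all hypothetical choices made only for illustration.

```python
from itertools import product

# Hypothetical toy world: positions 0..4 on a line, with the goal at position 4.
ACTIONS = {"left": -1, "stay": 0, "right": 1}
N_STATES = 5
GOAL = 4


def step(state, action):
    """Apply an action and clamp the result to the ends of the line."""
    return min(max(state + ACTIONS[action], 0), N_STATES - 1)


def reward(state):
    """Assumed reward function: +1 at the goal, a small penalty elsewhere."""
    return 1.0 if state == GOAL else -0.1


def cumulative_reward(start, plan):
    """Sum the reward collected after each action in the plan."""
    state, total = start, 0.0
    for action in plan:
        state = step(state, action)
        total += reward(state)
    return total


def best_plan(start, horizon):
    """Try every action sequence of the given length and keep the best one."""
    return max(
        product(ACTIONS, repeat=horizon),
        key=lambda plan: cumulative_reward(start, plan),
    )


plan = best_plan(start=2, horizon=3)
print(plan, cumulative_reward(2, plan))
```

Changing `reward` changes which plan wins, which is the sense in which the reward function determines the robot's behavior. Real robots use far more efficient planners than exhaustive search; enumeration is used here only because it keeps the sketch short.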

How do we ensure alignment between the user’s mental model and the robot?

… We could work on the robot side to leverage trustworthiness or work on the human side to calibrate expectations to reality.

What techniques are used when a robot has to interact with multiple people?

One thing we experimented with: if the robot can learn from many people, then when it sees a new person it could start from scratch, which allows the experience to be customized to that user. However, we don’t want the robot to learn from scratch, because many things are shared between users. So one way to look at this is to have the robot learn from its previous users, then learn the new person’s actions and maneuvers, allowing for individual customization.
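One simple way to picture "learn from previous users, then customize" is a shared prior that is gradually overridden by a new user's own data. The Python sketch below is an illustrative assumption, not the method described in the talk: the preference being learned (a single number, such as a preferred following distance), the function names, and the `prior_weight` parameter are all hypothetical.

```python
from statistics import mean


def population_prior(previous_users):
    """Average each previous user's observations, then average across users."""
    return mean(mean(observations) for observations in previous_users.values())


def personalized_estimate(prior, observations, prior_weight=5.0):
    """Blend the shared prior with a new user's observations.

    prior_weight (an assumed tuning knob) acts like a count of
    pseudo-observations: with no data the estimate equals the prior,
    and with more data it moves toward the new user's own average.
    """
    n = len(observations)
    if n == 0:
        return prior
    return (prior_weight * prior + sum(observations)) / (prior_weight + n)


# Hypothetical data: one preferred value observed per interaction.
previous_users = {
    "user_a": [2.0, 2.2],
    "user_b": [1.8, 2.0],
    "user_c": [2.1, 1.9],
}

prior = population_prior(previous_users)
print(personalized_estimate(prior, []))         # new user, no data yet
print(personalized_estimate(prior, [3.0] * 5))  # after five interactions
```

The robot never starts from scratch, because the first estimate is what previous users had in common, yet each new observation pulls the estimate toward the individual.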

Watch a recap from Anca Dragan’s session: